chat gpt app download


Kworld trend | reviews

OpenAI has released a chatbot prototype with an eye-catching new capability, and the release also shows how many hurdles the technology still has to overcome. OpenAI adapted GPT-3.5 to create ChatGPT and trained it to answer in a conversational format. The underlying model predicts which text is likely to follow a given string of words, while ChatGPT is tuned to interact with users in a more human-like way.


ChatGPT does a great job, clearly showing how far chatbots have come in recent years, but it still stumbles at times.

ChatGPT: Optimizing Language Models for Dialogue

We’ve trained a model called ChatGPT that interacts in a conversational way. The dialogue format allows ChatGPT to answer follow-up questions, admit mistakes, challenge incorrect premises, and reject inappropriate requests. ChatGPT is a sister model to InstructGPT, which is trained to follow instructions in a prompt and provide a detailed response.

We’re excited to introduce ChatGPT to get user feedback and learn about its strengths and weaknesses. During the research preview, ChatGPT is free to use. Try it now at chat.openai.com.

CHATGPT

It’s hard to say what’s wrong with the code without more context. Can you provide more information on what the code is supposed to do and what isn’t working as expected? Also, is this the whole code or just a part of it?

USER

It’s just part of it. The error never comes up. I think something is wrong with the channel.

CHATGPT

It’s hard to say without more information about what the code is supposed to do and what happens when it’s executed. One possible problem with the code you provided is that the resultWorkerErr channel is never closed, which means the code could hang if nothing is ever written to resultWorkerErr. This can happen if b.resultWorker never returns an error or if it is canceled before it has a chance to return one.

To fix this problem, you can close the resultWorkerErr channel after writing to it. For example, you can add the following line of code after the line that sends the error on the channel:

close(resultWorkerErr)

Again, it’s hard to say if this is the problem you’re experiencing without more information about the problem you’re seeing. Can you provide more details on what happens when you run the code?
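The hang described above comes from a receive that waits on a channel nobody will ever send on or close. The sketch below illustrates the fix under stated assumptions: `resultWorker` and `runWorker` are hypothetical stand-ins for `b.resultWorker` and its caller (the original user code is not shown), and the buffered channel is an assumption. Instead of closing right after the send, as suggested in the reply, it swaps in the common `defer close(...)` variant so the channel is closed on every exit path, including the one where no error is sent.

```go
package main

import (
	"errors"
	"fmt"
)

// resultWorker is a hypothetical stand-in for b.resultWorker: it either
// fails or finishes cleanly, depending on the fail flag.
func resultWorker(fail bool) error {
	if fail {
		return errors.New("worker failed")
	}
	return nil
}

// runWorker starts the worker in a goroutine and returns a channel that
// carries at most one error. The buffer of 1 lets the sender finish even
// if nobody is receiving yet.
func runWorker(fail bool) <-chan error {
	resultWorkerErr := make(chan error, 1)
	go func() {
		// Runs on every exit path, so a receiver never waits forever.
		defer close(resultWorkerErr)
		if err := resultWorker(fail); err != nil {
			resultWorkerErr <- err
		}
	}()
	return resultWorkerErr
}

func main() {
	for _, fail := range []bool{true, false} {
		// Receiving from a closed, empty channel returns the zero value
		// and ok == false instead of blocking.
		err, ok := <-runWorker(fail)
		fmt.Printf("fail=%v err=%v ok=%v\n", fail, err, ok)
	}
}
```

With the close in place, the success case yields `ok == false` immediately rather than blocking, which is what lets the caller tell "no error" apart from "still waiting".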

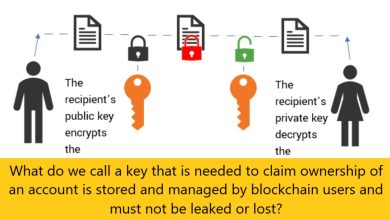

We trained this model using Reinforcement Learning from Human Feedback (RLHF), using the same methods as InstructGPT, but with slight differences in the data collection setup. We trained an initial model using supervised fine-tuning: human AI trainers provided conversations in which they played both sides, the user and the AI assistant. We gave trainers access to model-written suggestions to help them compose their responses.
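To make the supervised fine-tuning step concrete, here is a minimal sketch of how a trainer-written conversation could be turned into training pairs. It is an illustration only, not OpenAI's pipeline: the `Turn` and `Example` types, the `sftExamples` helper, and the "role: text" history format are all hypothetical. The assumption is that each assistant turn becomes a target, conditioned on every turn before it.

```go
package main

import (
	"fmt"
	"strings"
)

// Turn is one message in a demonstration conversation; the trainer
// writes both the "user" and the "assistant" turns.
type Turn struct {
	Role string
	Text string
}

// Example is one supervised pair: the model sees Context and is trained
// to produce Target.
type Example struct {
	Context, Target string
}

// sftExamples turns every assistant turn into a target, conditioned on
// all turns that precede it (hypothetical formatting).
func sftExamples(conv []Turn) []Example {
	var out []Example
	var history []string
	for _, t := range conv {
		if t.Role == "assistant" {
			out = append(out, Example{Context: strings.Join(history, "\n"), Target: t.Text})
		}
		history = append(history, t.Role+": "+t.Text)
	}
	return out
}

func main() {
	conv := []Turn{
		{"user", "What is a Go channel?"},
		{"assistant", "A typed conduit for passing values between goroutines."},
		{"user", "How do I signal that no more values are coming?"},
		{"assistant", "Call close on the channel from the sending side."},
	}
	for i, ex := range sftExamples(conv) {
		fmt.Printf("example %d\ncontext:\n%s\ntarget: %s\n\n", i, ex.Context, ex.Target)
	}
}
```

One conversation with two assistant turns yields two examples, so longer dialogues give the model practice at answering follow-up questions in context.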

To create a reward model for reinforcement learning, we needed to collect comparison data, which consisted of two or more model responses ranked by quality. To collect this data, we took conversations that AI trainers had with the chatbot. We randomly selected a model-written message, sampled several alternative completions, and had AI trainers rank them. Using these reward models, we can fine-tune the model using Proximal Policy Optimization. We performed several iterations of this process.
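The comparison data can be illustrated with a short sketch. Assuming the InstructGPT-style setup, a trainer's ranking of K responses expands into K(K-1)/2 "better vs. worse" pairs, and the reward model is trained to minimize -log σ(r_better - r_worse) on each pair. The helper names, the response labels, and the scores below are hypothetical; a real reward model would produce the scores from the conversation and response text.

```go
package main

import (
	"fmt"
	"math"
)

// Pair is one comparison: the reward model should score Better above Worse.
type Pair struct {
	Better, Worse string
}

// pairsFromRanking expands a ranking (best first) of K responses into
// all K*(K-1)/2 ordered comparisons.
func pairsFromRanking(ranked []string) []Pair {
	var pairs []Pair
	for i := 0; i < len(ranked); i++ {
		for j := i + 1; j < len(ranked); j++ {
			pairs = append(pairs, Pair{Better: ranked[i], Worse: ranked[j]})
		}
	}
	return pairs
}

// pairwiseLoss computes -log(sigmoid(rBetter - rWorse)) as
// log(1 + exp(-(rBetter - rWorse))). It shrinks toward 0 when the
// preferred response already scores higher.
func pairwiseLoss(rBetter, rWorse float64) float64 {
	return math.Log1p(math.Exp(-(rBetter - rWorse)))
}

func main() {
	// Hypothetical trainer ranking, best first.
	ranked := []string{"D", "C", "A", "B"}
	// Hypothetical reward-model scores for each response.
	scores := map[string]float64{"A": 0.1, "B": -0.5, "C": 0.8, "D": 1.2}

	pairs := pairsFromRanking(ranked)
	total := 0.0
	for _, p := range pairs {
		total += pairwiseLoss(scores[p.Better], scores[p.Worse])
	}
	fmt.Printf("%d comparisons, mean loss %.4f\n", len(pairs), total/float64(len(pairs)))
}
```

Once trained, the reward model's scalar score is the reward that Proximal Policy Optimization tries to increase when fine-tuning the chatbot.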